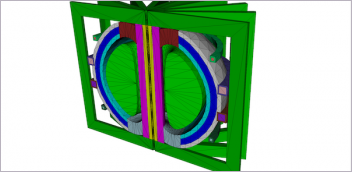

Best known as a manufacturer, GE also offers engineering HPC services to other companies. It is also researching how quantum computers might one day be used in simulation to calculate “optimal” solutions to complex problems that today cannot be solved to that degree. Image courtesy of GE.


January 31, 2023

On-demand high-performance computing (HPC) has become a mainstream option for solving the most demanding engineering simulations. When deciding between on-premises and in-cloud HPC, asking the right questions is key.

“Pay as you go is often not the cheapest solution,” says Rod Mach, president of TotalCAE, which manages HPC for engineering firms, covering both a client’s on-premises HPC and any cloud accounts the client uses.

Cloud capacity is sometimes sold as “reserved cloud,” an environment set aside specifically for running simulations. Cost savings vary by vendor.

Mach says most TotalCAE clients use a mix of “on-prem” and reserved cloud, also known as steady state. The reserved cloud option can deliver “significant cost savings” for ongoing use, Mach says. These customers then turn to on-demand “for the occasional overflow or if they have enough licensing and want to pay to not queue on hardware.”

Mach says they walk new customers through a set of questions to help them decide what services they need. “Because TotalCAE manages both infrastructures and eliminates the IT staffing and IT software costs, the costing decision comes down to infrastructure.” The questions are:

- How often do you run this workload? Do you run it every day, once a month or once a year? Is this a day-to-day workload, or a one-off workload?

- How many cores do you need to run this job, and how long will the job take at that core count?

- Do you have the CAE licensing to run at that core count?

This last question is important in reaching a decision about costs, Mach notes. “If you are license-limited to 16 cores, then accessing large amounts of cores [on demand] may not be the right solution.”
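Mach’s three questions reduce to a handful of numbers. The sketch below is a minimal illustration, not TotalCAE’s method: it caps the core count at the license limit and turns the answers into core-hours per month, the quantity any cost comparison starts from. The `Workload` fields and function names are hypothetical, and it assumes the license caps the cores a single job may use and that run time is measured at the usable core count.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    runs_per_month: float  # question 1: how often the workload runs
    cores_wanted: int      # question 2: cores you would like per job
    hours_per_run: float   # question 2: wall-clock hours per job, measured at the usable core count
    licensed_cores: int    # question 3: cores your CAE license permits (assumed per-job cap)


def usable_cores(w: Workload) -> int:
    # Assumption: cores beyond the license limit cannot be used by the solver,
    # so renting them adds cost without adding speed.
    return min(w.cores_wanted, w.licensed_cores)


def monthly_core_hours(w: Workload) -> float:
    # Core-hours per month if you provision exactly the usable cores.
    return w.runs_per_month * usable_cores(w) * w.hours_per_run


# Hypothetical team that would like 128 cores but is licensed for 16.
job = Workload(runs_per_month=4, cores_wanted=128, hours_per_run=10.0, licensed_cores=16)
print(usable_cores(job), monthly_core_hours(job))
```

In this example the extra 112 cores contribute nothing, which is the situation Mach warns about when a team is license-limited.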

Mach says if an engineering team plans to use HPC for more than 50% of its simulation workload, the most cost-effective solution is often reserved cloud or an existing on-premises cluster. “It will be substantially cheaper than on-demand,” Mach says. At a 25% usage rate, Mach says, on-premises is usually cheaper than on-demand cloud. For occasional use, on-demand resources will be cheaper. He also notes, “an on-prem lease is cheaper than reserved cloud instances for the same duration.”
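Mach’s thresholds describe a break-even point between a fixed monthly cost (reserved cloud or on-premises) and a usage-based bill (on-demand). The sketch below is a simplified model under stated assumptions: it reads the usage percentages as the fraction of hours a core is busy, uses an average 730-hour month, and the prices are placeholders rather than quotes from any vendor. All names are hypothetical.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def on_demand_cost(core_hours: float, rate_per_core_hour: float) -> float:
    # Pay as you go: cost scales with the core-hours actually used.
    return core_hours * rate_per_core_hour


def break_even_utilization(fixed_monthly_per_core: float, rate_per_core_hour: float) -> float:
    # Fraction of the month a reserved or on-premises core must be busy
    # before its fixed monthly cost matches renting the same hours on demand.
    return fixed_monthly_per_core / (rate_per_core_hour * HOURS_PER_MONTH)


def cheaper_option(utilization: float, fixed_monthly_per_core: float,
                   rate_per_core_hour: float) -> str:
    # Compare one month of fixed cost against the on-demand bill for the same usage.
    used_hours = utilization * HOURS_PER_MONTH
    if fixed_monthly_per_core < on_demand_cost(used_hours, rate_per_core_hour):
        return "reserved/on-premises"
    return "on-demand"


# Placeholder prices (per core), not quotes from any vendor.
RATE, FIXED = 0.10, 18.25
print(break_even_utilization(FIXED, RATE))  # break-even as a fraction of the month
for u in (0.10, 0.50):
    print(u, cheaper_option(u, FIXED, RATE))
```

In this model, Mach’s observation that on-premises usually wins at 25% usage corresponds to a fixed monthly cost per core below what roughly 182 on-demand core-hours would cost. The sketch deliberately ignores licensing, storage, data transfer and staffing.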

Many companies already have compute resources and dedicated IT staff, so the clean-slate approach described above doesn’t completely apply. This scenario requires a different set of questions.

Reserved Cloud Benefits

Adding reserved cloud to the simulation mix has many possible benefits. It is a dedicated resource, neither shared with other users (as in-house HPC sometimes is) nor subject to peak pricing (as on-demand cloud can be).

Most cloud vendors offer optimization options when selecting a reserved cloud service. For example, AMD says its 3rd Generation EPYC 7003 series processors with AMD 3D V-Cache technology offer three times as much L3 cache as its other 3rd Generation EPYC CPUs. Some simulation products thrive on the low latency and high bandwidth of a larger cache, including Siemens Simcenter STAR-CCM+, as DE recently reported.

“Even if the CPU packs a serious processing punch, if the L3 cache they’re trying to retrieve data from isn’t big enough, they’ll have to go all the way to the RAM to get it instead,” Mach says. “This takes roughly an order of magnitude longer to retrieve than if it were available in the cache.” If the cloud vendor offers this CPU, and the customer is using STAR-CCM+ or another CAE product that benefits from a large L3 cache, a deal can be struck.
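Mach’s point can be made concrete with the standard weighted-average memory access time: each access costs the cache latency on a hit and the RAM latency on a miss. The sketch below uses illustrative units only; the one number taken from the text is the rough 10x gap between cache and RAM, and the hit rates are assumptions, not measurements of any EPYC part.

```python
def effective_latency(hit_rate: float, cache_latency: float, ram_latency: float) -> float:
    """Average time per memory access, weighting cache hits and trips to RAM."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_latency + (1.0 - hit_rate) * ram_latency


# Illustrative units: RAM taken as ~10x slower than L3 ("an order of magnitude").
CACHE, RAM = 1.0, 10.0
for hit_rate in (0.5, 0.9):
    print(f"hit rate {hit_rate:.0%}: {effective_latency(hit_rate, CACHE, RAM):.2f}")
```

Raising the hit rate from 50% to 90%, which a larger cache can do for a working set that now fits, cuts the average access time by nearly a factor of three in this toy model.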

Vendors also routinely cite two further benefits of reserved cloud: better security (because it is run by a team of specialists) and cost savings. Most cloud vendors can strike a deal offering a lower cost per simulation run in a reserved setting than with the pay-as-you-go option.

Five Key Questions

Based on discussions with users and vendors and a review of existing research, we gathered a set of critical questions that may help engineering teams decide how to organize their HPC simulation infrastructure.

What is the primary use case? If the workload is time sensitive or requires low latency, on-premises may be a better option. If there is flexibility on deadlines and workloads, cloud may be a good choice.

What is the budget? On-premises infrastructure typically requires a larger upfront investment, while cloud infrastructure offers pay-as-you-go pricing or the reserved cloud model described earlier.

What is the required scale? If the workload requires a large-scale HPC infrastructure, the cloud may be more cost-effective. If the workload is small, on-premises may be a better option, although for only occasional use Mach finds on-demand cloud is usually cheaper.

What are the data security and compliance requirements? If data security and compliance are a high priority, on-premises infrastructure may be a better option as it offers more control over the physical infrastructure. Some vendors now offer certified services for high-security computation. Engineering cloud services vendor Rescale, for example, offers System and Organization Controls 2 Type 2 attestation, Cloud Security Alliance registration, and an International Traffic in Arms Regulations-compliant environment. Rescale works with several leading cloud services vendors.

Engineering computation specialist Rescale features several Ansys products. Image courtesy of Rescale.

What is the level of expertise and resources available? Does the company have the necessary expertise and resources in-house to manage an on-premises HPC infrastructure? A team of start-up engineers with a great idea and an established manufacturing company come to this question from two different directions. The startup doesn’t have to spend precious resources on hardware and IT support; the large company already has both.

Trading Time for Money

Golder Associates is a consulting engineering firm recently acquired by WSP. It has clients in manufacturing, oil & gas, mining, transportation, government and power. DE recently talked with Golder’s computational fluid dynamics (CFD) lead W. Daley Clohan about the company’s use of HPC for simulation.

“I prefer to use on-demand cloud HPC resources, but I also use on-premise HPC resources when needed,” Clohan says. “The business model for consulting engineering essentially boils down to trading time for money.”

Golder Associates uses on-premise and in-cloud high-performance computing to run complex simulations for clients in various industries including manufacturing. Image courtesy of Golder Associates.

Clohan says the introduction of HPC was “a game changer” for scientific and engineering modeling. HPC clusters can “efficiently run increasingly more complex, integrated multi-phase and/or longer simulations than ever before using cutting-edge computational resources.”

Clohan notes there is a downside to HPC. “Building and maintaining an HPC resource is no trivial endeavor. It requires both upfront capital and an annual operating budget to keep the system running and modern during mission-critical usage,” he says.

Staffing is also a challenge; the people needed to run HPC resources “are rather niche and challenging to find.”

By running on-premise and in-cloud HPC, Clohan says Golder gets the best of both worlds. Yet there are pros and cons for both options. “The main advantage with using third-party cloud HPC resources is that maintenance, technical troubleshooting and modernization initiatives are dealt with by the vendor,” Clohan says. The drawback? “Users have limited control over the architecture of third-party HPC resources.”

On-premise HPC gives Golder “complete control over the resource.” The obvious drawback is “all the background support required to keep it running.”

Golder uses HPC resources to run simulations of all sizes. “I do not overly pay attention to the geometrical size of my simulations or the number of cells in my simulation. I am more concerned with runtimes,” Clohan says. For example, a microfluidic simulation might be geometrically small, yet require many small cells, “while a coastal flooding simulation may be geometrically large, but only require relatively large cells.”
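Clohan’s contrast follows from a simple estimate: on a uniform mesh, cell count is roughly domain volume divided by cell volume, so cell size matters far more than overall extent. The domain and cell sizes below are hypothetical, chosen only to mirror his two examples, and real meshes are rarely uniform.

```python
def uniform_cell_count(domain_volume_m3: float, cell_size_m: float) -> float:
    """Approximate number of cubic cells of edge length cell_size_m filling the domain."""
    return domain_volume_m3 / cell_size_m ** 3


# Hypothetical microfluidic chip: 1 cm x 1 cm x 1 mm, meshed with 10 micrometer cells.
micro = uniform_cell_count(1e-2 * 1e-2 * 1e-3, 1e-5)
# Hypothetical coastal domain: 10 km x 10 km x 50 m, meshed with 10 m cells.
coastal = uniform_cell_count(10_000 * 10_000 * 50, 10.0)
print(f"microfluidic: {micro:.1e} cells, coastal: {coastal:.1e} cells")
```

Under these assumptions the physically tiny chip needs about 20 times as many cells as the coastal domain, which is why run time, not geometric size, is the better planning metric.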

Clohan has developed a working rhythm regarding simulation run times. He prefers simulation run times of less than 6 hours “or at least 18 hours + 24 hrs * (n days) < run time < 24 hrs + 24 hrs * (n days).” Clohan says, “This allows me to view and manage my simulations during working hours, while letting the model run while I am working on other tasks or spending time with my family.”
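Clohan’s rule of thumb can be written as a small check. This sketch reads “n days” as a whole number n ≥ 0 and uses the strict inequalities exactly as quoted; the function name is hypothetical. One plausible reading, assuming a job is launched at the start of a working day, is that short runs finish the same day and longer runs finish in the final six hours before the next workday begins.

```python
import math


def fits_clohan_window(run_hours: float) -> bool:
    """True if run_hours is under 6, or 18 + 24n < run_hours < 24 + 24n for a whole n >= 0."""
    if run_hours <= 0:
        raise ValueError("run_hours must be positive")
    if run_hours < 6:
        return True
    n = math.floor(run_hours / 24)  # number of whole days the run spans
    return 18 + 24 * n < run_hours < 24 + 24 * n


for hours in (4, 12, 20, 43, 48, 70):
    print(hours, fits_clohan_window(hours))
```

A planner could use a check like this to decide whether to add or remove cores until a job’s expected run time lands in an acceptable window.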

Golder primarily uses three simulation products: Ansys Fluent, Siemens STAR-CCM+ and Flow Science’s FLOW-3D. The company does not have any in-house apps.

“I tend to get more involved with customizing existing simulation codes” than with creating new routines from scratch, Clohan explains.


About the Author

Randall S. Newton is principal analyst at Consilia Vektor, covering engineering technology. He has been part of the computer graphics industry in a variety of roles since 1985.
